Seminar Recap: NLP SIG Monthly Research Event – Large Language Models Focus

Abstract

Our NLP SIG seminar on Large Language Models (LLMs), held on the 3rd of November, brought together two leading researchers to share their insights into recent developments in the field.

Distinguished Speakers:

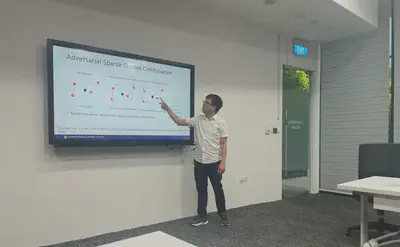

- Anh Tuan Luu: Luu's career spans postdoctoral research at MIT and NLP research roles at NTU and I2R. His work focuses on improving the robustness of AI systems.

- Wenya Wang: During her postdoctoral work at the University of Washington, Wang developed methods for improving the scalability and reasoning capabilities of AI systems.

Highlights from the Talks:

Anh Tuan Luu spoke on the robustness of NLP models, focusing on work that defends AI systems against adversarial attacks in order to make them more reliable and trustworthy.

Wenya Wang addressed the challenges of hallucination, scalability, and adaptability in modern LLMs such as GPT-4. Her research aims to build language models that perform better at commonsense reasoning and adapt quickly across a range of NLP tasks.

Interactive Q&A Session:

The talks were followed by an interactive Q&A session in which attendees questioned the speakers directly, exchanged ideas, and worked through the more complex topics in real time.